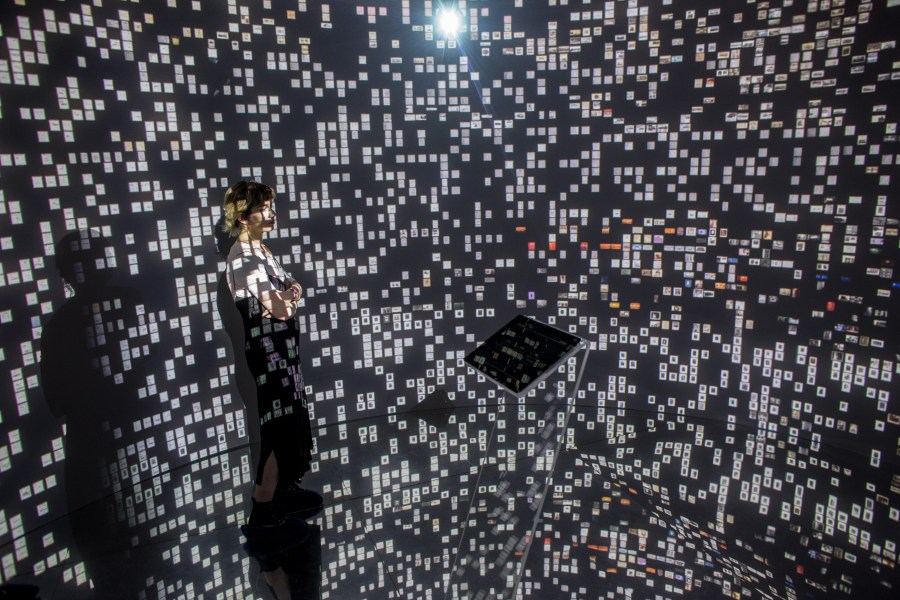

Generative AI: What’s all the hype about?

The new wave of generative artificial intelligence, like ChatGPT and DALL-E, has got the tech business in a frenzy.

Venture capitalists are pouring money into AI startups: Investments in generative AI have already exceeded $2 billion. But there are lots of unknown unknowns about the innovation. There’s virtually no oversight from the government, and teachers, artists, researchers and others are raising concerns.

“There’s so much happening under the hood that we don’t get access to … there needs to be much more transparency,” said Alex Hanna, director of research at the Distributed AI Research Institute.

On the show today: why AI is getting so much attention these days, ethical issues with the tech and what lawmakers should focus on when trying to regulate it. Plus, why some say it could exacerbate the climate crisis.

In the News Fix, some Kia and Hyundai cars keep getting stolen, and insurance companies are taking notice. Plus, we might spend the most on health care, but health in the United States falls behind other high-income countries by several measures. And, why you might want to get ready for inflation whiplash.

Later, we’ll hear from a listener who’s pro-ChatGPT when it comes to writing cover letters. And in the spirit of Dry January, Elva Ramirez, author of “Zero Proof: 90 Non-Alcoholic Recipes for Mindful Drinking,” gives us a little history lesson on mocktails (or cleverages).

Here’s everything we talked about today:

- “Investors seek to profit from groundbreaking ‘generative AI’ start-ups” from Financial Times

- Alex Hanna’s “Mystery AI Hype Theater 3000” streamed weekly on Twitch

- “AI rockets ahead in vacuum of U.S. regulation” from Axios

- “Robots trained on AI exhibited racist and sexist behavior” from The Washington Post

- “What jobs are affected by AI? Better-paid, better-educated workers face the most exposure” from the Brookings Institution

- “Yellen Sees Low Inflation as More Likely Long-Term Challenge” from Bloomberg

- “State Farm says it has stopped insuring some Kia, Hyundai vehicles” from CBS News

- “Health care spending in the US is nearly double that of other wealthy nations: report” from The Hill

We want to hear your answer to the Make Me Smart question. Leave us a voice message at 508-U-B-SMART, and your submission may be featured in a future episode.

Make Me Smart February 1, 2023 Transcript

Note: Marketplace podcasts are meant to be heard, with emphasis, tone and audio elements a transcript can’t capture. Transcripts are generated using a combination of automated software and human transcribers, and may contain errors. Please check the corresponding audio before quoting it.

Kai Ryssdal

Hey everybody, I’m Kai Ryssdal. Welcome back to Make Me Smart, where none of us is as smart as all of us.

Kimberly Adams

And I’m Kimberly Adams, thank you for joining us on this Tuesday. And because it is Tuesday, that means it’s time to do a deep dive into a single topic. And today we’re going to all get smarter together about the thing that so many of us have been talking about and experimenting with and all the other things: Chat-GPT and the new wave of generative AI.

Kai Ryssdal

There is oh so much hype about these systems. A lot of money as well. So we’re going to talk about what it might mean for us, both what we know about it now and what, honestly, to get Rumsfeld-y here, the “unknown unknowns,” because, you know, for some, it’s a little bit scary. For some, it’s an opportunity, and we’re going to talk about all that stuff.

Kimberly Adams

Yes. And here to help us understand this is Alex Hanna, director of research at the Distributed AI Research Institute. Welcome to the show.

Alex Hanna

Hey, thanks for having me.

Kimberly Adams

So first of all, can you explain what generative AI is exactly? And how it’s different from sort of other artificial intelligence tools that people might already be familiar with?

Alex Hanna

Yeah, totally. So there’s kind of two classes of AI tools. There’s AI that’s used to classify different types of things. So for instance, businesses will often use things like churn models to predict, you know, how customers are going to return, how likely they are to return. Generative AI works differently. It takes some kind of an input, and it generates some kind of a novel output. So instead of doing some kind of classification, or a task where it’s generating a number or predicting a number, it’s generating something like text, or images, or, you know, all these things around art and essays, things that we now know as generative AI.

Kai Ryssdal

Why then, alright, so look. We’ve known this is coming, we’ve been using AI in one form or another for a while now, right? Even if we don’t always know it. Why do you think now everybody’s like, “Oh, my God, it’s AI,” you know?

Alex Hanna

Well, because now it looks like the things of our science fiction dreams, or nightmares in some other cases, right? And, you know, these kinds of things, we have all these visions of what an AI system can look like, we have all these science fiction models of how, or… the computer system in “Star Trek” where you make an inquiry, and it gives you some kind of an informative answer. And so it looks like that. It also looks pretty believable. It looks like we can’t distinguish between what is human made and computer made. There’s this old test, which is not really very rigorous. But it’s called the Turing Test, named after the computer scientist Alan Turing. And, you know, that effectively says, “Well, if a human can’t distinguish between computer generated output and a human generated output, then it passes the Turing Test.” So we’re getting to this point where these things which look impossibly complex, look very artistic, look very nuanced. We really are having a harder time telling the differences between if this is human made, or is this computer made. So I think that’s why we’re getting really into this hype cycle right now.

Kimberly Adams

So basically, because we’re scared now.

Alex Hanna

Fear, I think, is one reaction. Excitement is another reaction. Or, if you’re like me and some of my colleagues, maybe kind of rolling your eyes strongly is another reaction.

Kimberly Adams

Wait. Why the strong eye roll?

Kai Ryssdal

Say more about that.

Alex Hanna

The strong eye roll, because I think we really have to get under what these systems are doing, to really, you know, get a sense of how they’re doing it and why they’re producing this output. So there’s excitement on one hand from a lot of the people who create this technology. And there’s a lot of fear from people who either may be subject to this technology or who are, let’s say, teachers and artists. But when you step back and kind of see what’s actually happening under the hood… yeah, there’s a lot of math, and I don’t want to undersell the engineering that’s involved in this. It’s kind of a massive amount of engineering. At the same time, the sources that are being used to train these models… in AI, we say that it kind of learns. You know, there’s a lot in that metaphor, but it learns from all these past data. But we don’t know where a lot of these data come from, or the data are explicitly from particular people and particular artists. So I know there’s at least one lawsuit in the works. Some artists have been organizing a suit, because people will give prompts to tools like Midjourney or Stable Diffusion and they’ll say, “I would like to paint a picture of a dragon in the style of,” you know, “whoever this famous dragon creator is, or dragon illustrator is.” It illustrates it very much in that style. So it’s doing a lot of, frankly, stealing of a lot of these prior works.

Kai Ryssdal

Sorry, something you said triggered a question for me. And that was the engineering. Is AI, Chat-GPT specifically, but all the rest of them as well, is it hard engineering?

Alex Hanna

It’s hard insofar as you’re building something that has billions of what are called parameters. They basically have to learn all these numerical representations of these images or these texts. And so it’s difficult engineering, merely on the scale of these, and that’s why a lot of the companies that are doing this are either large companies like Google or Microsoft, or they’re like OpenAI, which is smaller, but it has sponsorship from Microsoft. So they are pretty large engineering feats. But also, a lot of it is scaling these things up from kind of smaller tests, you know, testing and finding out how to do this in a kind of a more efficient, more cost-effective manner across, you know, hundreds, if not thousands, of computers and specialized hardware.

Kimberly Adams

Well, and also in an ethical way, which I don’t have to tell you because you used to work for Google’s ethical AI team and left the company along with some others, because you all said you didn’t believe the company was doing enough to mitigate some of the harms that its products were having on marginalized communities. When you think about the fact that this technology is out in the wild now, and when it comes to this widespread use of generative AI like Chat-GPT, or DALL-E, what are you most concerned about right now?

Alex Hanna

So the first thing I’m thinking about is that there is so much capital, so much money in this generative AI game now. I think I saw a report, something like 30 engineers and research scientists that were at Google’s machine learning lab, Google Brain, I want to say, I think 30 engineers have left in the past year alone to go ahead and work at these large language, large image generation startups. That’s a huge brain drain if you’re thinking about an organization that is, I’d say half the people in the organization are engineers or research scientists. And it’s also distracting from what could be more responsible development of AI technologies, focusing more on governance, focusing more on auditing, focusing more on fairness and ethics of this work and building in those guardrails. We know that this regulation is coming both in the EU with the “AI Act” as well as a few bills kicking around the US Congress. And so focusing on making those… making these things work better for people is getting short shrift. Now, the other two things I’m thinking about are, one, the outputs of these. The outputs of these are pretty narrow, especially if you’re thinking about language. The languages that they’re serving are going to be those languages which already have an immense amount of representation in the public internet. So that’s going to look like English mostly. You’re not going to get technologies that work for people who speak Xhosa in South Africa, or Amharic in Ethiopia, or Egyptian Arabic, I mean, if we’re sort of cutting close to home, which is, you know, what my parents speak. I mean, you’re not actually getting that representation. The third thing is the externalities of this. Sam Altman, who is actually one of the VCs involved in OpenAI, has said something of the nature of “these things are emitting wild amounts of carbon.” These things are just carbon machines.
So I’d love to see, if there’s any intrepid listeners out there, if someone could actually estimate, you know, based on available data, how much carbon is emitted from a single query to Chat-GPT. And I really actually haven’t seen anything of that nature. So that’s, you know, that’s a summation of kind of all the worries that I have with these things, both in how it attracts attention, how it attracts capital, how it is harming people that already don’t have access, or technologies that are serving them, and just exacerbating the climate catastrophe.

Kai Ryssdal

So just as a way to sort of bring this full circle here to our daily lives and where we go from here, what do you imagine, Alex, the regulatory environment to be? Who’s going to regulate it? Should it be… should it be regulated? How? I mean, the government does not have a great track record, certainly in the recent decade or two, of regulating technology. How do you suppose it happens here, if it needs to happen at all?

Alex Hanna

It certainly doesn’t have a good track record. But it’s also because regulation lags pretty far behind as new technologies develop. So I would say there needs to be focus on these large models, I think from the perspective of not only their outputs. It can be shown that you could game it for making offensive statements based on race, gender, nationality, disability. They’re probably not going to catch them all unless they’re actually stress tested by folks that have more access to their internals. So that’s one vector in which we could focus on them. We could also focus on the different kinds of consequences that could possibly come out of that, whether that’s around safety, whether that’s around equal access to things that may be benefits. And since we’re so early in this Chat-GPT game, you know, we’re getting these essays in which, you know, there’s fear around, you know, the college essay, or the high school essay is dead. Which, you know, I have less of a concern around that, as an educator. I think that, you know, you can tell what the differences are. And I think to kind of buy into the narrative that this is completely indistinguishable, maybe it’s giving the engineers a bit too much credit on this. But it would be about holding the companies who are generating and creating these things responsible, not the person that uses it for a downstream application, but with the knowledge that there’s so much happening under the hood that we don’t get access to, that are being used and are going to be used in so many domains, there needs to be much more transparency, there needs to be much more ability to audit, to have folks who are not affiliated with the company do some reporting. There is a bill working its way through US Congress called the “Algorithmic Accountability Act,” which would allow auditing to occur via the FTC and FTC technologists.
But you know, even if that passes, I mean, there may be a pretty huge enforcement gap there. So it’s a pretty complicated answer to the question of “should there be regulation?” The short answer is yes. But the harder answer is how.

Kai Ryssdal

Early days yet. Very early days, no joke. Alex Hanna is the director of research at the Distributed AI Research Institute. Alex, thanks a lot for your time and your perspective. We really appreciate it.

Alex Hanna

Thanks so much, Kai. Thanks so much, Kimberly.

Kimberly Adams

Thanks. You know, every single one of these conversations about Chat-GPT and generative AI that I hear or that we’ve had, I just feel like it opens up a whole batch of new questions.

Kai Ryssdal

Yeah totally. Totally.

Kimberly Adams

You know, there’s so much left to unpack. Meghan McCarty Carino did an interview with somebody over on the tech show about some of those lawsuits, and you know, artists feeling like, you know, their work is being used to feed these algorithms without their consent. And that there’s literally, not only is there no legal framework for their, you know, how to deal with their art being used to feed this algorithm without their consent, but there’s also no legal framework to see whether or not the outcome of what is generated is a copyright violation. So it’s just all completely new.

Kai Ryssdal

And it’s so interesting, because somehow it feels, and look, Chat-GPT has been around for what, like six weeks, it feels like it’s been around forever.

Kimberly Adams

Years, actually. Different versions of it have been around for years. It’s the version that’s new.

Kai Ryssdal

That’s true. That’s true. That’s a very good point. Yeah, I just… it is early days yet. It’s all such new technology, such new technology.

Kimberly Adams

Well, it’s just… I keep thinking about the tractor, you know. And these other earth shattering technologies that completely upended the way that we live and work right, you know, for tens of thousands of years of humanity, people, you know, plowed fields themselves by hand or with animals, and then we got tractors and a whole chunk of the labor force went away, right? Or went to do something else. So, you know, I always think about these sorts of major technology changes as “is it a tractor?”

Kai Ryssdal

That’s a really good way to do it, actually. It’s a really good way to do it. I like that. Let us know what you think, if you are half full, or perhaps half empty, about the future of generative AI and Chat-GPT and all the rest of them, because there are more coming, of course. Send us your thoughts. Our phone number is 508-827-6278, 508-U-B-SMART, or you can email us, old fashioned email, makemesmart@marketplace.org. We’re coming right back. Alright, news. Kimberly Adams, you go.

Kimberly Adams

I have two stories about insurance. Because what more thrilling topic than insurance, right? You know. So the first one is this story I saw on CBS and a couple of other places, but the link I have for the show notes is CBS. It’s that there are several cars that seem to be uninsurable right now, or a version of it. So State Farm, and it looks like Progressive a bit, is starting to limit whether or not they will insure some Kia and Hyundai, you know, however we’re going to say that, vehicles because they’re so easy to steal. And you know…

Kai Ryssdal

Oh my god really?

Kimberly Adams

Yeah, I’ve been… one of my friends in St. Louis was telling me about this, about how like this particular brand of cars keeps getting stolen, keeps getting stolen. Apparently, this has been all over local news all over the country, that, you know, thefts of particular brands of cars have really gone up because something about them makes them easier to steal. And so I’m going to read from CBS here: “State Farm has said it will temporarily stop providing new auto insurance policies for some model years and trim levels of Hyundai and Kia vehicles in some states because of an increase in thefts of those cars.” Obviously not specifying which versions, because you know, don’t want to increase the target. Progressive has stopped writing new policies on some of these cars, according to another CBS station, but the companies are like, “Oh, we’re doing what we can to fix the cars.” But yeah, this is really interesting that your car is so easily stealable that insurance companies are like, “Yeah, we’re not even going to bother.”

Kai Ryssdal

Not even gonna try. Wow. That’s not great.

Kimberly Adams

So that’s story number one. Story number two is a report out from the Commonwealth Fund about how terrible US health care is, which is basically saying that the health care spending in the United States is double, nearly double what other wealthy countries spend, but our health is significantly worse. We’re more likely to have chronic conditions, we go to the doctor less frequently. And I’m going to read this list of something, let’s see: “Their analysis suggests that overall health in the US is worse than in other high-income countries. Life expectancy at birth for the US is three years below the OECD average. The obesity rate in the US is nearly double the OECD average at about 43% compared to 25%. In addition, the rate of avoidable deaths in the United States was 336 deaths per 1000 people compared to 225.” Part of that is due to the level of violence in the United States. But we also have a lot more chronic conditions. 30% of us have two or more chronic conditions compared to 17% or 20% and 26% are some of the worst. But we’re… as much money as we spend on health care, we have terrible health care in this country.

Kai Ryssdal

Yeah. Yeah, I mean, we just do. Yes, we’ve got some of the best health care in the world, but it’s terrible health care. And for the price per capita, it’s embarrassingly bad.

Kimberly Adams

Yes. All right. What do you have?

Kai Ryssdal

Alright, so mine’s quick, and it’s very wonky, but it’s a little bit, “huh?” So Janet Yellen has just wrapped up a trip through Africa. She wrapped it up on Friday or Saturday, and she gave an interview on Friday, that is, while she was in Johannesburg, that is just sort of this week, like yesterday morning, hitting the American press. And I just wanted to highlight it because I think it’s, one, very interesting, but number two, also very “huh.” Janet Yellen, Secretary of the Treasury, former Fed chair, says “persistently low inflation is going to be a continuing problem in this economy.” Now, that’s interesting for two reasons. Number one, inflation is really high, now coming down, yes. And really just like two, two and a half percent over the past six months. So inflation is moderating. But the real interesting part of that is for years and years and years and years and years, especially while Janet Yellen was chair of the Fed, inflation was well below the Fed’s target of 2%. And no matter what the central bank did, it could not get it to 2%.

Kimberly Adams

It would not move.

Kai Ryssdal

It could not. And in fact, I and I….

Kimberly Adams

I’ve done so many stories on this.

Kai Ryssdal

It’s crazy. When I asked her one day at a thing in Washington with Malpass, the World Bank guy, I said, “Why is inflation so low?” I know, she looked at me and literally gave me the shruggy. She was like, “I don’t know.” So…

Kimberly Adams

I remember that because the whole room burst into laughing. She was just, “I don’t know.”

Kai Ryssdal

Right? And you’re like, “No, it’s your job to know.” She was between Fed chair and Treasury secretary. Anyway, if we’re going back there, I think there’s gonna be some whiplash, and people are gonna be like, “Wait, what? And huh?” If and when the Fed gets inflation under control for this cycle, and we go back to sub-2% inflation, what is even happening out there?

Kimberly Adams

Time for some new economic models?

Kai Ryssdal

Oh my god. Anyway, that’s what we got news wise.

Kimberly Adams

Yes, so let’s do the mailbag.

Mailbag

Hi Kai and Kimberly. This is Godfrey from San Francisco. Jessie from Charleston, South Carolina. And I have a follow up question. It has me thinking and feeling a lot of things.

Kai Ryssdal

Alright, so Amy and I were talking last week about people looking for jobs using Chat-GPT to stay on topic for a second and we asked all y’all to weigh in on that. Rachel sent us this.

Rachel

Hi, my name is Rachel. I’m calling from Austin, Texas. I was listening to the episode on Friday, and I am half full on using Chat-GPT to write cover letters. Not only do I find cover letters to be completely pointless, and usually not read by the trainers. If you look at larger corporations, they’re all using AI to screen applications. So if AI is being used to screen the things that I write, why shouldn’t I be able to write things with AI? Thanks so much. Love the podcast.

Kimberly Adams

Doesn’t that just cancel each other out? Like AI writing the application, AI reading the application?

Kai Ryssdal

And how far are we from like humans not gonna be involved? I mean, look, that makes total sense and I get it. It just somehow doesn’t feel right to me.

Kimberly Adams

Yeah, I guess it’s sort of like a way to just be like, “Okay, this is a step we should probably skip in the first place and just get straight to the next step.” Because if, if the AI can basically do the step on both ends, then maybe the step is not necessary for humans at all.

Kai Ryssdal

Right, right. Right. So let’s get rid of cover letters. They’re bogus anyway.

Kimberly Adams

Yes. Anyway. All right. Before we go, we’re gonna leave you with this week’s answer to the Make Me Smart Question, which is, “What is something you thought you knew but later found out you were wrong about?” And this week’s answer comes from Elva Ramirez, author of “Zero Proof: 90 Non-Alcoholic Recipes for Mindful Drinking.”

Elva Ramirez

What’s something I thought I knew but later found out I was wrong about? The temperance drink, which we now call a mocktail or a zero proof cocktail, is as old as our classic cocktails. Before researching my book, I didn’t know that temperance drink recipes appear in the very first cocktail book, which is called “A Bartender’s Guide,” and it was published by American Jerry Thomas in 1862. Mocktails evolved during Prohibition. But one of the reasons they gained a terrible reputation has to do with the 1980s, when bartenders who tried to provide the drink style relied on heavy, too-sweet concoctions that had little to do with cocktail culture. While mocktails did come to have a bad reputation, they shouldn’t be sneered at, because they were accorded respect alongside mint juleps and gin fizzes from the very start.

Kai Ryssdal

History is cool, I’m just gonna say history is cool. I also think by the way, if I could just editorialize here for one second, and I know you will disagree, Ms. Adams. I think zero proof cocktails is better than cleverage.

Kimberly Adams

Um, I’m, I’m still debating all of these things. Spirit free cocktails or spirit free. Or just, you know, I don’t know. It’s because I think the definition of cocktail requires alcohol, so like, adding a modifier on to a thing that it is not? I don’t know. I’m still pondering it. I haven’t come to any solutions as I, you know, finish out Dry January.

Kai Ryssdal

You ponder and report back and we’ll discuss on Friday. How about that?

Kimberly Adams

Yeah. But yes. Okay.

Kai Ryssdal

Good, good, good. So whatever your thoughts on zero proof cocktails or cleverages or whatever else you want to call it, send us your thoughts, your answer to the Make Me Smart Question, your questions for Wednesdays, whatever you like. Send us what you got. Our number is 508-827-6278. 508-U-B-SMART.

Kimberly Adams

Make Me Smart is produced by Courtney Burgsieker. Ellen Rolfes writes our newsletter. Our intern is Antonio Barreras. Today’s program was engineered by Juan Carlos Torrado with mixing by Charlton Thorpe.

Kai Ryssdal

Ben Tolliday and Daniel Ramirez composed our theme music. Our acting senior producer is Marissa Cabrera. Bridget Bodnar is the director of podcasts. Francesca Levy is the executive director of Digital and On Demand. And Marketplace’s Vice President and General Manager is Neal Scarbrough.

Kimberly Adams

I have to say I’ve tried a large variety of spirit free cocktails, cleverages, mocktails over the last month, and many of them are quite good.

Kai Ryssdal

There you go.
