Under Elon Musk’s leadership, Twitter faces content moderation challenges

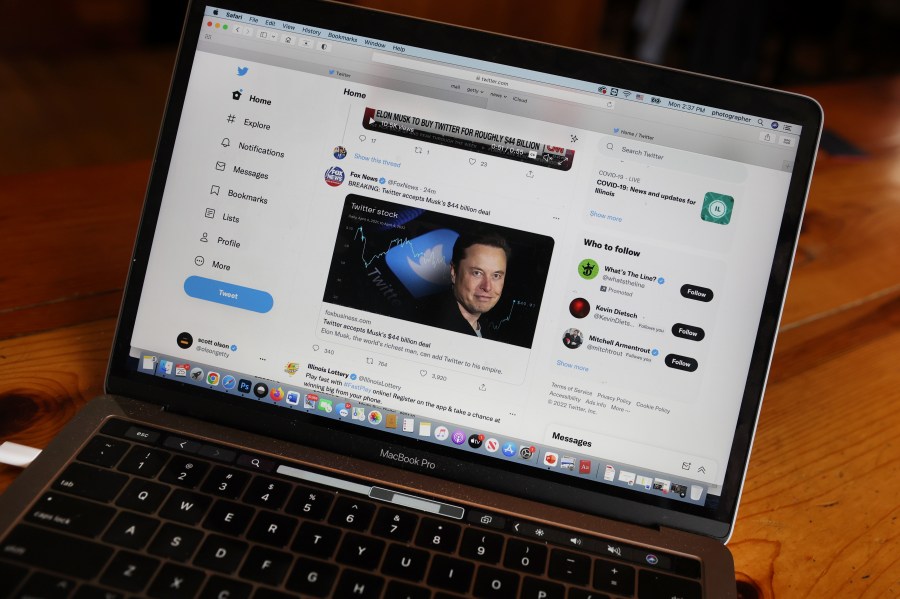

It’s an uncertain Monday at Twitter because Elon Musk has taken over and started shaking things up.

Last week, according to Bloomberg, he reassured employees that he did not plan to cut three-quarters of the staff, as he had reportedly told investors he would.

But the self-described free speech absolutist has made no secret of his desire to make some personnel cuts, particularly around content moderation.

Marketplace’s Meghan McCarty Carino spoke with Sarah Roberts, a professor and director of the Center for Critical Internet Inquiry at the University of California, Los Angeles. She previously worked on Twitter’s health research team.

She said that while content moderation is often framed as a political issue, it’s much more than that.

The following is an edited transcript of their conversation. Warning: This interview references abusive online material involving children.

Sarah Roberts: Talking about having a social media platform “without content moderation” is just an absurdity; it’s not possible. So if we know that there has to be some kind of baseline way to control material that may just not be suited for any kind of consumption, such as, let’s say, child sexual abuse material, if we acknowledge that there has to be some way to intervene, then the question becomes, where does one draw the line? And Elon Musk will find that he has to draw the line, or he has to have employees and staff who are trusted enough and knowledgeable enough in this space to make those decisions. And then he has to have people to implement it.

Meghan McCarty Carino: How labor-intensive is this work? I mean, how does it actually work?

Roberts: Typically, the generalists who do the operational, day-to-day content moderation work for third-party labor provision firms. A disproportionate amount of this work happens in places where labor is cheap. It is very poorly paid compared to any other role in tech. It’s really grueling work. If you ever thought about this and imagined that computers were doing some of the content moderation, that is true. But in some cases, [moderators] now go through and vet the decisions that an automated machine-learning algorithm, let’s say, or some other kind of [artificial intelligence] has made. More and more people have gotten involved in it, and they are evaluated both on how many items they process and on how accurate their decisions are. So it’s a fairly stressful job in that regard, as well.

McCarty Carino: Where AI is being used in this process, how successful has it been?

Roberts: Well, it’s definitely good at doing some things, like recognizing material that has already been flagged as inappropriate or unwanted and automatically pulling it down very quickly before it circulates. A use case for this, again, unfortunately, is child sexual exploitation material, where humans don’t necessarily have to get involved in identifying it and being exposed to it. But whenever things get complex, whenever things require discernment, such as understanding why a symbol might be present or understanding the cultural meaning of particular turns of phrase, the computer is only as good as the information that’s been programmed into it. And those things change on a dime, and they are very culturally and locally specific. That often takes a human being to evaluate.

McCarty Carino: Right. I remember that during different stages of the pandemic, as information and scientific understanding were changing quite frequently, certain posts would get flagged and users would get, you know, kind of booted off for a while because of things that, if a human had looked at them, were clearly not misinformation.

Roberts: Yeah, I think this is a great case in point, where something is breaking and changing not only daily but, like, hourly. Claims need to be evaluated by people who have the knowledge to do it. They need to be evaluated against the internal policies and rules that a company has, or against the legal mandates it’s operating under in a particular country or region. Those are really, really complex cognitive engagements when you think about all of the things that come to bear. How does one decide that a particular post is misinformation? You really have to understand context, read the information that’s being asserted, know something about the situation as it’s moving, etc.

McCarty Carino: So it sounds like humans are still the biggest backstop when it comes to content moderation.

Roberts: We have to remember that there are humans in the loop at every step of the way. Somebody had to program that algorithm, and somebody tested it. People produce its training data by labeling data sets. So the idea that we might reach a moment in the near future where humans are not involved in this activity is totally aspirational. We can expect humans to remain a central part of this. I think we probably all should try to know a little bit more about what that work entails as we go forward.

Related links: More insight from Meghan McCarty Carino

Roberts wrote a book all about content moderators. It’s called “Behind the Screen: Content Moderation in the Shadows of Social Media.”

She interviewed moderators from Silicon Valley to the Philippines about the often invisible work they do and the emotional toll it takes on them.

In fact, there have been multiple lawsuits against social media platforms for not doing enough to take care of these workers’ mental health.

This year, YouTube reached a $4.3 million settlement in one such case. In one of the biggest suits so far, Facebook agreed in 2020 to pay more than $50 million.


Every day, the “Marketplace Tech” team demystifies the digital economy with stories that explore more than just Big Tech. We’re committed to covering topics that matter to you and the world around us, diving deep into how technology intersects with climate change, inequity, and disinformation.
